Programming assignment: initializing the parameter w

Initialize w with np.random.randn(shape) *

Import packages and load the data

    import numpy as np

    def model(X, Y, learning_rate = 0.01, num_iterations = 15000, print_cost = True, initialization = "he"):

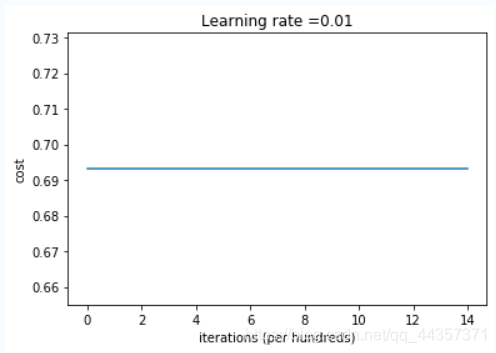

Zero initialization

    def initialize_parameters_zeros(layers_dims):
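Only the function signature survives here, so the following is a minimal sketch of what a zero initializer of this shape could look like, not the assignment's actual body. The dictionary layout (`W1`, `b1`, ...) and the example layer sizes are assumptions made for illustration.

```python
import numpy as np

def initialize_parameters_zeros(layers_dims):
    """Return a dict with W1..WL and b1..bL, all filled with zeros.

    layers_dims -- list of layer sizes, input layer first.
    The "W<l>" / "b<l>" key naming is an assumption of this sketch.
    """
    parameters = {}
    num_layers = len(layers_dims)  # counts the input layer as well
    for l in range(1, num_layers):
        # W<l> maps layer l-1 (columns) to layer l (rows)
        parameters["W" + str(l)] = np.zeros((layers_dims[l], layers_dims[l - 1]))
        parameters["b" + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

# Hypothetical layer sizes, chosen only to show the resulting shapes
params = initialize_parameters_zeros([3, 2, 1])
print(params["W1"].shape, params["W2"].shape)
```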

    parameters = model(train_X, train_Y, initialization = "zeros")

Cost after iteration 0: 0.6931471805599453

Cost after iteration 1000: 0.6931471805599453

Cost after iteration 2000: 0.6931471805599453

Cost after iteration 3000: 0.6931471805599453

Cost after iteration 4000: 0.6931471805599453

Cost after iteration 5000: 0.6931471805599453

Cost after iteration 6000: 0.6931471805599453

Cost after iteration 7000: 0.6931471805599453

Cost after iteration 8000: 0.6931471805599453

Cost after iteration 9000: 0.6931471805599453

Cost after iteration 10000: 0.6931471805599455

Cost after iteration 11000: 0.6931471805599453

Cost after iteration 12000: 0.6931471805599453

Cost after iteration 13000: 0.6931471805599453

Cost after iteration 14000: 0.6931471805599453

On the train set:

Accuracy: 0.5

On the test set:

Accuracy: 0.5

    plt.title("Model with Zeros initialization")

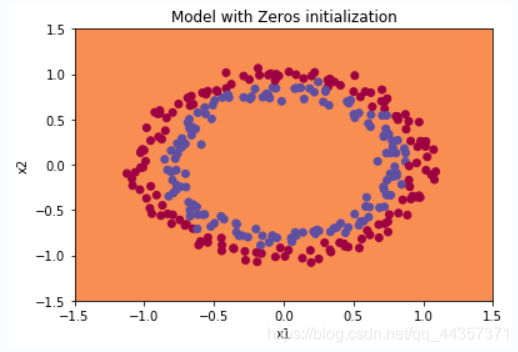

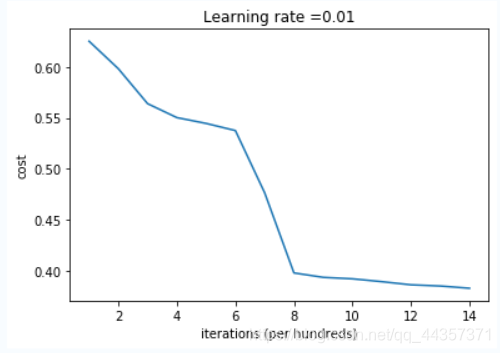

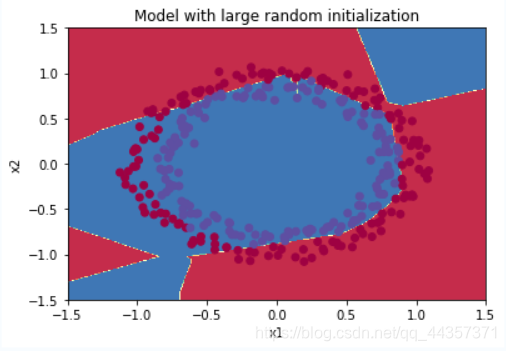
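The constant cost of 0.6931471805599453 in the log above is ln 2, which is what the cross-entropy gives when every prediction is 0.5. The sketch below reproduces that on a tiny made-up network; the 2 -> 4 -> 1 layout, the toy data, and the ReLU-hidden/sigmoid-output choice are assumptions, not the assignment's model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
X = rng.standard_normal((2, 4))        # 4 made-up examples with 2 features each
Y = np.array([[0, 1, 0, 1]])           # made-up labels
m = X.shape[1]

# All-zero parameters for a hypothetical 2 -> 4 -> 1 network
W1, b1 = np.zeros((4, 2)), np.zeros((4, 1))
W2, b2 = np.zeros((1, 4)), np.zeros((1, 1))

# Forward pass: ReLU hidden layer, sigmoid output
A1 = np.maximum(0, W1 @ X + b1)        # every hidden activation is 0
A2 = sigmoid(W2 @ A1 + b2)             # every prediction is sigmoid(0) = 0.5
cost = -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))

# Backward pass for the weights
dZ2 = A2 - Y
dW2 = dZ2 @ A1.T / m                   # zero, because A1 is zero
dZ1 = (W2.T @ dZ2) * (A1 > 0)          # zero, because W2 is zero
dW1 = dZ1 @ X.T / m

print(cost, np.log(2))
print(np.abs(dW1).max(), np.abs(dW2).max())
```

Both weight gradients come out as exactly zero, so gradient descent never moves the weights away from zero and all hidden units stay identical. That is the "fails to break symmetry" behaviour the accuracy of 0.5 reflects.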

Random initialization

    def initialize_parameters_random(layers_dims):
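The body of this function is not shown either. Going by the later remark that it multiplies `np.random.randn(..,..)` by 10, a rough sketch might look like the following. The `scale` and `seed` arguments, the zero biases, and the `W<l>` / `b<l>` key names are assumptions added for illustration.

```python
import numpy as np

def initialize_parameters_random(layers_dims, scale=10.0, seed=3):
    """Standard-normal weights multiplied by `scale`, zero biases.

    `scale` and `seed` are hypothetical parameters of this sketch.
    """
    rng = np.random.RandomState(seed)
    parameters = {}
    for l in range(1, len(layers_dims)):
        parameters["W" + str(l)] = rng.randn(layers_dims[l], layers_dims[l - 1]) * scale
        parameters["b" + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

# Hypothetical layer sizes
params = initialize_parameters_random([2, 10, 5, 1])
print(params["W1"].shape, float(np.abs(params["W1"]).mean()))
```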

    parameters = model(train_X, train_Y, initialization = "random")

Cost after iteration 0: inf

Cost after iteration 1000: 0.6250982793959966

Cost after iteration 2000: 0.5981216596703697

Cost after iteration 3000: 0.5638417572298645

Cost after iteration 4000: 0.5501703049199763

Cost after iteration 5000: 0.5444632909664456

Cost after iteration 6000: 0.5374513807000807

Cost after iteration 7000: 0.4764042074074983

Cost after iteration 8000: 0.39781492295092263

Cost after iteration 9000: 0.3934764028765484

Cost after iteration 10000: 0.3920295461882659

Cost after iteration 11000: 0.38924598135108

Cost after iteration 12000: 0.3861547485712325

Cost after iteration 13000: 0.384984728909703

Cost after iteration 14000: 0.3827828308349524

On the train set:

Accuracy: 0.83

On the test set:

Accuracy: 0.86

    plt.title("Model with large random initialization")

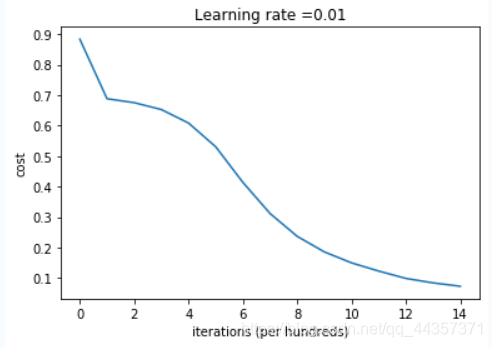

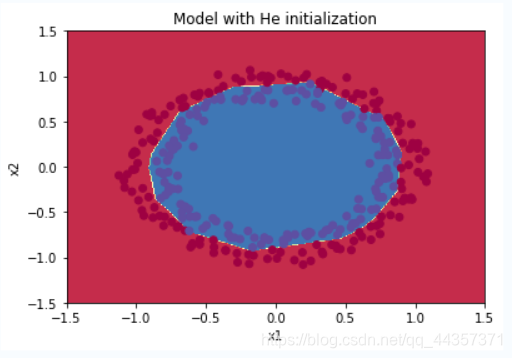
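The `inf` cost at iteration 0 is most likely a floating-point effect: with weights this large, the output pre-activation can be big enough for the sigmoid to round to exactly 1.0 (or 0.0), and the cross-entropy then takes the log of 0 whenever the label disagrees. The values below are made up to illustrate that mechanism; they are not taken from the assignment's data.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([40.0])   # hypothetical output pre-activation produced by oversized weights
y = np.array([0.0])    # hypothetical true label that disagrees with the prediction

a = sigmoid(z)         # exp(-40) is below float64 resolution next to 1, so a rounds to 1.0
with np.errstate(divide="ignore"):     # silence the log(0) warning for this demo
    loss = -(y * np.log(a) + (1 - y) * np.log(1 - a))

print(a[0] == 1.0, loss[0])
```

A single example like this is enough to make the averaged cost infinite, which would explain why the log only shows finite values once training has pulled the predictions back from saturation.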

He initialization

This function is similar to the previous initialize_parameters_random(...). The only difference is that instead of multiplying np.random.randn(..,..) by 10, you will multiply it by $\sqrt{\frac{2}{\text{dimension of the previous layer}}}$, which is what He initialization recommends for layers with a ReLU activation.

    def initialize_parameters_he(layers_dims):
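Following the description above, a He initializer could be sketched as below: the same loop as the random version, with the factor 10 replaced by `sqrt(2 / size of the previous layer)`. As before, the `seed` argument, the zero biases, and the key names are assumptions of this sketch rather than the assignment's code.

```python
import numpy as np

def initialize_parameters_he(layers_dims, seed=3):
    """Standard-normal weights scaled by sqrt(2 / fan_in), zero biases.

    `seed` is a hypothetical parameter added for reproducibility.
    """
    rng = np.random.RandomState(seed)
    parameters = {}
    for l in range(1, len(layers_dims)):
        fan_in = layers_dims[l - 1]    # dimension of the previous layer
        parameters["W" + str(l)] = rng.randn(layers_dims[l], fan_in) * np.sqrt(2.0 / fan_in)
        parameters["b" + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

# Hypothetical, deliberately wide layers so the empirical std is a stable estimate
params = initialize_parameters_he([1000, 500, 1])
print(params["W1"].std(), np.sqrt(2.0 / 1000))
```

The two printed numbers should be close, since the standard deviation of each weight matrix is set by the width of the layer feeding into it.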

    parameters = model(train_X, train_Y, initialization = "he")

Cost after iteration 0: 0.8830537463419761

Cost after iteration 1000: 0.6879825919728063

Cost after iteration 2000: 0.6751286264523371

Cost after iteration 3000: 0.6526117768893807

Cost after iteration 4000: 0.6082958970572937

Cost after iteration 5000: 0.5304944491717495

Cost after iteration 6000: 0.4138645817071793

Cost after iteration 7000: 0.3117803464844441

Cost after iteration 8000: 0.23696215330322556

Cost after iteration 9000: 0.18597287209206828

Cost after iteration 10000: 0.15015556280371808

Cost after iteration 11000: 0.12325079292273548

Cost after iteration 12000: 0.09917746546525937

Cost after iteration 13000: 0.08457055954024274

Cost after iteration 14000: 0.07357895962677366

On the train set:

Accuracy: 0.9933333333333333

On the test set:

Accuracy: 0.96

    plt.title("Model with He initialization")

Conclusions

| **Model** | **Train accuracy** | **Problem/Comment** |
| --- | --- | --- |
| 3-layer NN with zeros initialization | 50% | fails to break symmetry |
| 3-layer NN with large random initialization | 83% | too large weights |
| 3-layer NN with He initialization | 99% | recommended method |
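To make the "too large weights" comment a bit more concrete, the sketch below pushes made-up inputs through a stack of ReLU layers and compares the size of the final activations under the two random scalings. The width, depth, and data are arbitrary choices for illustration, and `final_rms` is a hypothetical helper, not part of the assignment.

```python
import numpy as np

def final_rms(scale_fn, width=100, depth=5, seed=0):
    """RMS of the activations after `depth` ReLU layers of size `width`."""
    rng = np.random.RandomState(seed)
    a = rng.randn(width, 200)                      # 200 made-up standard-normal inputs
    for _ in range(depth):
        W = rng.randn(width, width) * scale_fn(width)
        a = np.maximum(0, W @ a)                   # ReLU layer, biases left at zero
    return np.sqrt(np.mean(a ** 2))

rms_large = final_rms(lambda fan_in: 10.0)                 # "large random" scaling
rms_he = final_rms(lambda fan_in: np.sqrt(2.0 / fan_in))   # He scaling
print(f"large random: {rms_large:.3g}, He: {rms_he:.3g}")
```

With the factor 10 the activations grow by orders of magnitude at every layer, while the He factor keeps them at roughly the scale of the input, which is consistent with the ranking in the table.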



Author: runze

Published: 2020-02-16, 09:03:51

Last updated: 2020-02-23, 08:30:52

Original link: http://yoursite.com/2020/02/16/%E5%90%B4%E6%81%A9%E8%BE%BE%20%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0/02%E6%94%B9%E5%96%84%E6%B7%B1%E5%B1%82%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C%EF%BC%9A%E8%B6%85%E5%8F%82%E6%95%B0%E8%B0%83%E8%AF%95%E3%80%81%E6%AD%A3%E5%88%99%E5%8C%96%E4%BB%A5%E5%8F%8A%E4%BC%98%E5%8C%96/%E7%BC%96%E7%A8%8B%E4%BD%9C%E4%B8%9A%E2%80%94%E2%80%94%E5%88%9D%E5%A7%8B%E5%8C%96%E5%8F%82%E6%95%B0w/

Copyright notice: "Attribution-NonCommercial-ShareAlike 4.0". Please keep the original link and author when reposting.