Programming Assignment: Convolution Basics 1

```python
import numpy as np
```

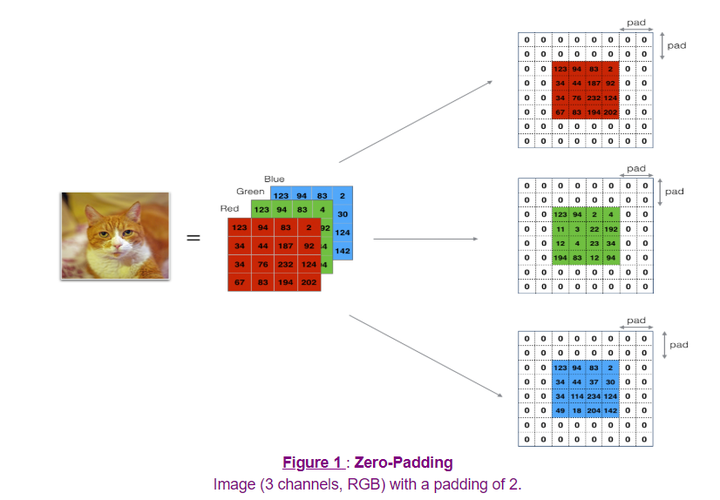

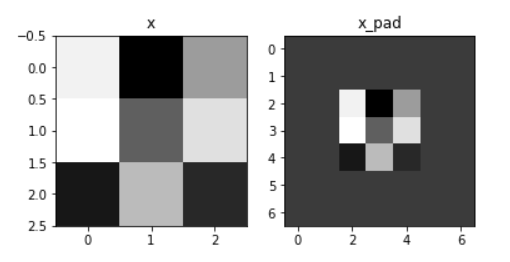

Zero pad

```python
def zero_pad(X, pad):
    ...
```
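Only the signature of `zero_pad` survives here. The following is a minimal sketch that assumes `X` holds a batch of images with shape `(m, n_H, n_W, n_C)`. That layout is the usual course convention and is assumed, not shown in this post. The sketch pads only the height and width axes with `np.pad`:

```python
import numpy as np


def zero_pad(X, pad):
    """Zero-pad the height and width axes of a batch of images.

    Assumed layout (not shown in the post): X.shape == (m, n_H, n_W, n_C).
    The batch axis and the channel axis are left unpadded.
    """
    return np.pad(
        X,
        ((0, 0), (pad, pad), (pad, pad), (0, 0)),
        mode="constant",
        constant_values=0,
    )
```

With `pad = 2`, an input of shape `(4, 3, 3, 2)` becomes `(4, 7, 7, 2)`, which matches the shapes printed below. `x_pad[1,1]` is all zeros because row index 1 falls inside the two-row zero border.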

```python
np.random.seed(1)
```

```
x.shape = (4, 3, 3, 2)
x_pad.shape = (4, 7, 7, 2)
x[1,1] = [[ 0.90085595 -0.68372786]
 [-0.12289023 -0.93576943]
 [-0.26788808 0.53035547]]
x_pad[1,1] = [[ 0. 0.]
 [ 0. 0.]
 [ 0. 0.]
 [ 0. 0.]
 [ 0. 0.]
 [ 0. 0.]
 [ 0. 0.]]
```


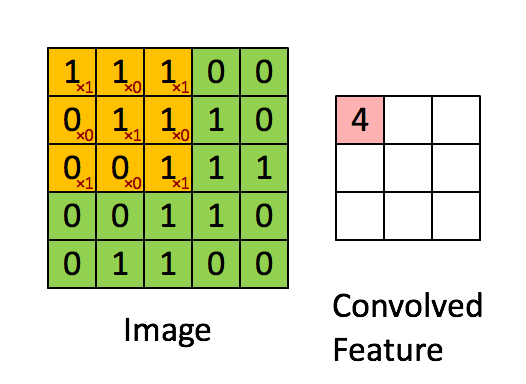

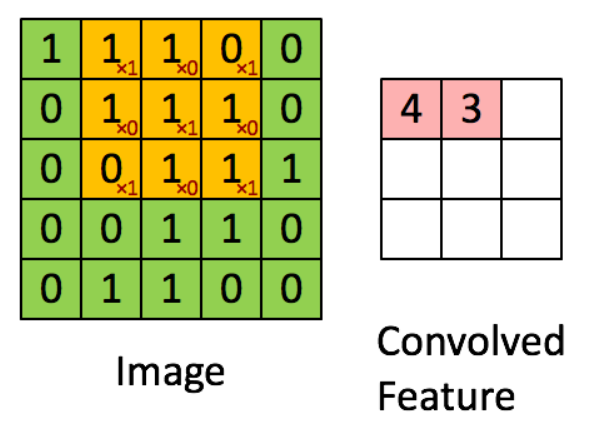

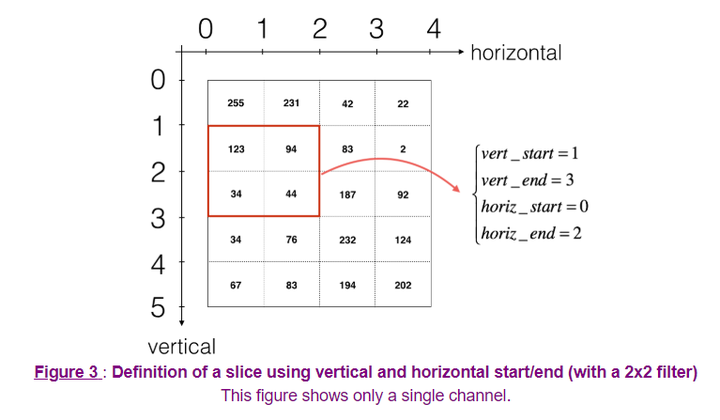

Single-step convolution

```python
def conv_single_step(a_slice_prev, W, b):
    ...
```
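The following is a minimal sketch of one convolution step. It assumes `a_slice_prev` and `W` share the shape `(f, f, n_C_prev)` and that `b` is a single bias stored as a `(1, 1, 1)` array. These shape conventions come from the course and are not stated in the post. The step multiplies element-wise, sums everything, and adds the bias:

```python
import numpy as np


def conv_single_step(a_slice_prev, W, b):
    """Apply one filter to one window of the previous activation.

    Assumed shapes: a_slice_prev and W are (f, f, n_C_prev); b holds one value.
    """
    s = a_slice_prev * W          # element-wise product over the window
    Z = np.sum(s)                 # collapse to a single number
    Z = Z + float(np.squeeze(b))  # add the (scalar) bias
    return Z


# A 2x2x3 window of ones, a filter of 0.5s and a bias of 1:
# 12 * 0.5 + 1 = 7
a = np.ones((2, 2, 3))
W = np.full((2, 2, 3), 0.5)
b = np.array([[[1.0]]])
print(conv_single_step(a, W, b))  # 7.0
```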

Forward propagation (convolutional layer)

```python
def conv_forward(A_prev, W, b, hparameters):
    ...
```
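The following is a sketch of the convolutional forward pass under several assumptions. The `hparameters` keys `"stride"` and `"pad"`, the shapes in the docstring, and the returned `cache = (A_prev, W, b, hparameters)` are all assumed course conventions, not taken from this post. Each output height or width is `floor((n_prev + 2*pad - f) / stride) + 1`. Instead of four nested loops calling `conv_single_step`, this version loops over output positions only. It uses `np.tensordot` to apply every filter to every example's window at once, which computes the same sums:

```python
import numpy as np


def conv_forward(A_prev, W, b, hparameters):
    """Forward pass of a convolutional layer (sketch).

    Assumed shapes (course convention, not shown in the post):
      A_prev: (m, n_H_prev, n_W_prev, n_C_prev)
      W:      (f, f, n_C_prev, n_C)
      b:      (1, 1, 1, n_C)
      hparameters = {"stride": int, "pad": int}
    """
    m, n_H_prev, n_W_prev, n_C_prev = A_prev.shape
    f, _, _, n_C = W.shape
    stride = hparameters["stride"]
    pad = hparameters["pad"]

    # Output size: floor((n_prev + 2*pad - f) / stride) + 1
    n_H = (n_H_prev + 2 * pad - f) // stride + 1
    n_W = (n_W_prev + 2 * pad - f) // stride + 1

    pad_width = ((0, 0), (pad, pad), (pad, pad), (0, 0))
    A_prev_pad = np.pad(A_prev, pad_width, mode="constant")
    Z = np.zeros((m, n_H, n_W, n_C))

    for h in range(n_H):
        vs = h * stride
        for w in range(n_W):
            hs = w * stride
            window = A_prev_pad[:, vs:vs + f, hs:hs + f, :]  # (m, f, f, n_C_prev)
            # Contract over (f, f, n_C_prev): one value per example and filter.
            Z[:, h, w, :] = np.tensordot(window, W, axes=([1, 2, 3], [0, 1, 2]))
    Z += b.reshape(1, 1, 1, n_C)  # one bias per filter

    cache = (A_prev, W, b, hparameters)
    return Z, cache


np.random.seed(1)
A_prev = np.random.randn(2, 5, 7, 4)
W = np.random.randn(3, 3, 4, 8)
b = np.random.randn(1, 1, 1, 8)
Z, cache = conv_forward(A_prev, W, b, {"pad": 1, "stride": 2})
print(Z.shape)  # (2, 3, 4, 8)
```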

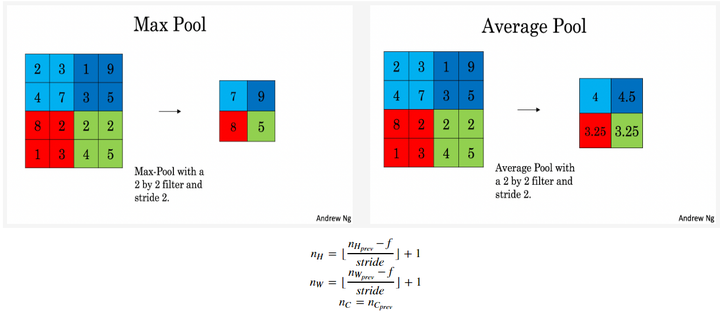

Pooling forward pass

```python
def pool_forward(A_prev, hparameters, mode="max"):
    ...
```
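The following is a sketch of the pooling forward pass. It assumes `hparameters` carries the keys `"f"` (window size) and `"stride"`, that there is no padding, and that the cache is `(A_prev, hparameters)`. All of these are assumed course conventions, not shown in the post. Pooling has no weights: each channel's window is reduced independently with either the maximum or the mean.

```python
import numpy as np


def pool_forward(A_prev, hparameters, mode="max"):
    """Forward pass of a pooling layer without padding (sketch).

    Assumed (course convention, not shown in the post):
      A_prev: (m, n_H_prev, n_W_prev, n_C)
      hparameters = {"f": window size, "stride": step}
    """
    if mode not in ("max", "average"):
        raise ValueError(f"unknown pooling mode: {mode!r}")
    reduce = np.max if mode == "max" else np.mean

    m, n_H_prev, n_W_prev, n_C = A_prev.shape
    f = hparameters["f"]
    stride = hparameters["stride"]
    n_H = (n_H_prev - f) // stride + 1
    n_W = (n_W_prev - f) // stride + 1
    A = np.zeros((m, n_H, n_W, n_C))

    for h in range(n_H):
        vs = h * stride
        for w in range(n_W):
            hs = w * stride
            window = A_prev[:, vs:vs + f, hs:hs + f, :]  # (m, f, f, n_C)
            A[:, h, w, :] = reduce(window, axis=(1, 2))  # pool each channel separately

    cache = (A_prev, hparameters)
    return A, cache


x = np.arange(16, dtype=float).reshape(1, 4, 4, 1)
A, _ = pool_forward(x, {"f": 2, "stride": 2}, mode="max")
# A[0, :, :, 0] is [[5, 7], [13, 15]]: the largest value in each 2x2 block
```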

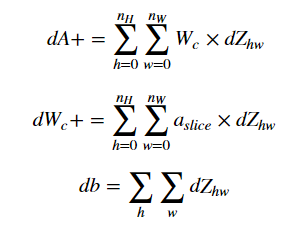

Backward propagation (convolutional layer)

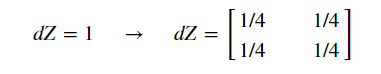

```python
def conv_backward(dZ, cache):
    ...
```
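Because each output is `Z[i, h, w, c] = sum(window * W[..., c]) + b[..., c]`, the gradients follow from three rules. The input window receives `W[..., c] * dZ[i, h, w, c]`. The filter receives `window * dZ[i, h, w, c]`. The bias receives the sum of `dZ` over every position of channel `c`. The following is a minimal sketch of those rules. It assumes the cache layout `(A_prev, W, b, hparameters)` with keys `"stride"` and `"pad"`, which is a course convention and not something this post shows. Like the forward sketch, it vectorizes over examples and filters with `np.tensordot`:

```python
import numpy as np


def conv_backward(dZ, cache):
    """Backward pass of a convolutional layer (sketch).

    Assumed (course convention, not shown in the post):
      cache = (A_prev, W, b, hparameters), hparameters = {"stride": s, "pad": p}
      A_prev: (m, n_H_prev, n_W_prev, n_C_prev)   W: (f, f, n_C_prev, n_C)
      b: (1, 1, 1, n_C)                            dZ: (m, n_H, n_W, n_C)
    Returns dA_prev, dW, db with the shapes of A_prev, W and b.
    """
    A_prev, W, b, hparameters = cache
    f = W.shape[0]
    stride = hparameters["stride"]
    pad = hparameters["pad"]
    _, n_H_prev, n_W_prev, _ = A_prev.shape
    _, n_H, n_W, _ = dZ.shape

    pad_width = ((0, 0), (pad, pad), (pad, pad), (0, 0))
    A_prev_pad = np.pad(A_prev, pad_width, mode="constant")
    dA_prev_pad = np.zeros(A_prev_pad.shape)
    dW = np.zeros(W.shape)
    # The bias of filter c is added at every output position of channel c.
    db = np.sum(dZ, axis=(0, 1, 2)).reshape(b.shape)

    for h in range(n_H):
        vs = h * stride
        for w in range(n_W):
            hs = w * stride
            window = A_prev_pad[:, vs:vs + f, hs:hs + f, :]  # (m, f, f, n_C_prev)
            dz = dZ[:, h, w, :]                              # (m, n_C)
            # dA: each filter W[..., c] scaled by dZ[i, h, w, c], summed over c
            dA_prev_pad[:, vs:vs + f, hs:hs + f, :] += np.tensordot(
                dz, W, axes=([1], [3]))
            # dW: each input window scaled by dZ[i, h, w, c], summed over i
            dW += np.tensordot(window, dz, axes=([0], [0]))

    # Drop the padded border to get the gradient of the unpadded input.
    dA_prev = dA_prev_pad[:, pad:pad + n_H_prev, pad:pad + n_W_prev, :]
    return dA_prev, dW, db
```

A numerical gradient check against the forward pass is a quick way to confirm the three rules for a given choice of `pad` and `stride`.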

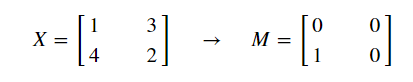

```python
def create_mask_from_window(x):
    ...
```
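In max pooling, only the input entry that was chosen as the maximum affects the output, so only that entry should receive the upstream gradient. The following is a minimal sketch of such a mask, assuming `x` is a single 2-D window:

```python
import numpy as np


def create_mask_from_window(x):
    """Return a boolean mask that is True where x equals its maximum."""
    return x == np.max(x)


x = np.array([[1.0, 3.0], [4.0, 2.0]])
print(create_mask_from_window(x))
# [[False False]
#  [ True False]]
```

If the window contains ties, every tied entry is marked `True`, so each of them receives the full gradient. The sketch keeps that simple behaviour rather than picking a single winner.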

```python
def distribute_value(dz, shape):
    ...
```
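In average pooling, every entry of an `n_H × n_W` window contributes `1 / (n_H * n_W)` of the output. The upstream gradient `dz` is therefore split evenly across the window. The following is a minimal sketch, assuming `shape` is a `(n_H, n_W)` tuple:

```python
import numpy as np


def distribute_value(dz, shape):
    """Spread the scalar gradient dz evenly over a window of the given shape."""
    n_H, n_W = shape
    average = dz / (n_H * n_W)  # each entry's share of the gradient
    return np.full(shape, average)


a = distribute_value(2.0, (2, 2))
# every entry of a is 2.0 / 4 = 0.5
```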

Pooling backward pass

```python
def pool_backward(dA, cache, mode="max"):
    ...
```
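The following is a sketch of the pooling backward pass. It assumes the cache is `(A_prev, hparameters)` with keys `"f"` and `"stride"`, which is a course convention not shown in the post. To keep the block self-contained, the mask rule and the even-split rule from the two helpers are written inline instead of calling them. Gradients are accumulated with `+=`, so overlapping windows (stride smaller than `f`) add their contributions together.

```python
import numpy as np


def pool_backward(dA, cache, mode="max"):
    """Backward pass of a pooling layer (sketch).

    Assumed (course convention, not shown in the post):
      cache = (A_prev, hparameters), hparameters = {"f": f, "stride": s}
      A_prev: (m, n_H_prev, n_W_prev, n_C)   dA: (m, n_H, n_W, n_C)
    """
    if mode not in ("max", "average"):
        raise ValueError(f"unknown pooling mode: {mode!r}")
    A_prev, hparameters = cache
    f = hparameters["f"]
    stride = hparameters["stride"]
    m, n_H, n_W, n_C = dA.shape
    dA_prev = np.zeros(A_prev.shape)

    for i in range(m):
        for h in range(n_H):
            vs = h * stride
            for w in range(n_W):
                hs = w * stride
                for c in range(n_C):
                    grad = dA[i, h, w, c]
                    if mode == "max":
                        window = A_prev[i, vs:vs + f, hs:hs + f, c]
                        # Mask rule: only the max entry receives the gradient.
                        mask = window == np.max(window)
                        dA_prev[i, vs:vs + f, hs:hs + f, c] += mask * grad
                    else:
                        # Even-split rule: each of the f*f entries gets an equal share.
                        dA_prev[i, vs:vs + f, hs:hs + f, c] += grad / (f * f)
    return dA_prev
```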

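When reposting, please credit the source. You are welcome to verify the sources cited in this article and to point out any errors or unclear wording, either in the comment section below or by email to 2470290795@qq.com.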

Article title: Programming Assignment: Convolution Basics 1

Word count: 748

Author: runze

Published: 2020-03-02, 21:12:17

Last updated: 2020-03-02, 22:48:59

Original link: http://yoursite.com/2020/03/02/%E5%90%B4%E6%81%A9%E8%BE%BE%20%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0/04%E5%8D%B7%E7%A7%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C/%E7%BC%96%E7%A8%8B%E4%BD%9C%E4%B8%9A-%E5%8D%B7%E7%A7%AF%E5%9F%BA%E7%A1%801/

Copyright notice: "Attribution-NonCommercial-ShareAlike 4.0". When reposting, please keep the original link and the author's name.